This presentation explores how open source software has reshaped technology, collaboration, and innovation from the 1960s to the present day.

This blog post is based on a Data Umbrella webinar featuring Juan Luis Cano Rodríguez, a prolific open source contributor. In this talk, Juan Luis takes us on a journey through the cultural, philosophical, and technical milestones of open source software, from its hacker roots to its current role in the age of AI. The story is much more than a timeline of programming languages and licenses: it’s a story about people, ideals, communities, and the evolving meaning of “freedom” in the digital world.

📺 Watch the Full Webinar!

This 75-minute video is a must-watch! It traces the evolution of open source, with all its values and challenges.

Slides: on Zenodo

Timestamps

00:00 Data Umbrella introduction

03:38 Juan Luis introduction

05:14 Juan Luis begins presentation

06:11 About Juan Luis

07:25 Disclaimer

09:20 1979: the rise and fall of hacker culture

11:44 1969: Bell Labs, Unix, C

14:15 1976: Copyright Act (in USA)

17:09 1979: Scribe markup language

18:05 1980-1989: GNU Project and Four Freedoms

18:45 Richard Stallman printer incident at MIT

22:00 Four Freedoms

25:24 Switzerland, CERN

25:55 1990-1998: The Linux bazaar and the Open Source definition

26:20 1991: World Wide Web

27:00 1993: Mosaic: first web browser

27:45 Linux (Ref: video, Intro to the Linux Operating System)

31:11 Python and more (R, Vim, Lua, Java, etc)

33:04 1997: The Cathedral and the Bazaar (an essay)

34:43 Netscape, JavaScript

35:45 1998: Open Source initiative

37:55 The Divide: why open source misses the point of free software

38:55 Digital age and the Big Data Explosion

40:10 dot com bubble

41:15 2001: animosity towards open source

42:00 orgs, Foundations: Apache, Linux, Python Software Foundation, Eclipse

44:04 2003: Google File System

44:45 2005: a new era of collaborative software development (Git, GitHub)

47:08 2011: Why software is eating the world

47:41 2012-2018: what do open source maintainers eat?

48:44 Growth of Python

49:30 open source is not sustainable (OpenSSL, Heartbleed, left-pad)

51:43 Nadia Eghbal, Roads and Bridges

52:17 open source system begins to fragment (licenses)

56:15 2019-2023: Post-open source and the Gen AI volcano

01:00:04 a new kind of open source license

01:00:55 2024: What’s next?

01:04:00 Q: Do you think the open source divide was avoidable?

01:05:36 Q: Are you optimistic about the future of open source in terms of funding?

01:07:45 Q: Web 3 and Blockchain

01:09:25 Q: Can you discuss OSPOs? (Open Source Program Offices)

01:12:30 Q: What do you think of the potential for the UFDA (user friendly developers association) model?

In this blog, we trace the history of open source software, starting from the early days of room-sized computers and academic code sharing, through the founding of the GNU project and the free software movement, the explosive growth of Linux and the World Wide Web in the 1990s, the rise of GitHub and big data in the 2000s, to the complex challenges of sustainability, ethics, and generative AI that define today’s landscape.

The webinar was hosted by Data Umbrella, a community-funded nonprofit supporting underrepresented voices in data science. Drawing on the talk by Juan Luis, product manager at QuantumBlack, AI by McKinsey and a longtime advocate for the PyData community, we’ll walk through the key milestones, cultural shifts, and philosophical debates that have shaped open source into what it is today.

Whether you’re new to open source or a seasoned contributor, this journey offers insights not just into the software we use every day, but into the values, struggles, and future possibilities of the open technology movement.

The Genesis of Computing and Hacker Ethos in the 1950s and 60s

Let’s begin at the dawn of computing, with what Juan Luis calls “the rise and fall of hacker culture,” a framing he stresses is subjective: “So as I said, I’m going to start with the very beginning of the history of computing. I call this section the rise and fall of hacker culture, and just to make it very clear, this is a subjective perspective…”

The 1950s and 60s were a world away from today’s computing landscape. Programming involved punch cards and other “arcane methods” for feeding programs into room-sized computers such as the PDP-10, a staple of university and research departments (shown in a photograph during the talk).

Crucially, early programming was largely an academic pursuit: “At the very beginning of this story, programming was mostly an academic activity.” Languages were low-level, with some code even written directly in assembly language. Debugging cycles were extremely long, and computing wasn’t yet ready for widespread business applications.

We’re talking about very low-level languages: some programs were still written in assembly, and the limitations of the time meant that development was slow and labor-intensive. But the interesting thing is that the academic notion of sharing knowledge, publishing articles, and exchanging ideas permeated the world of computing, with a profound effect on everything that followed.

Sharing code was common practice in the 60s and early 70s, even through physical means. While “open source” and “free software” weren’t yet defined terms, the ethos of sharing was the prevailing norm. “So interestingly, it was very normal in the 60s and beginning of the 70s to just share the code, with analog methods back then. Even though there was no notion yet of open source or free software, it was still pretty much the norm.”

The GNU Project and the Foundational Four Freedoms of Free Software (1980s)

The 1970s saw a shift as programmers began imposing restrictions on software, moving away from the open academic culture. An anecdote about Richard Stallman, then at MIT, illustrates this change. When using a markup language called Scribe, Stallman encountered “time bombs” placed by the author to prevent unlicensed use. This sparked outrage, not against charging for software, but against restricting user freedom.

“So with all these things in mind, towards the end of the 70s programmers were increasingly imposing restrictions on the software, and the academic culture that was in place in the 50s, 60s, and 70s of just sharing the code worry-free was starting to fall. There’s one anecdote that I found quite interesting from Richard Stallman; we’re going to talk about him in a moment. At the time he was a young student at MIT and he was using a markup language called Scribe, and the author of that system, Brian Reid, placed some time bombs in the source code so that users could not access an unlicensed copy of the software. Apparently the reaction was that this was a crime against humanity: not necessarily charging for the software, but restricting the user freedom…”

This sentiment fueled the GNU Project, initiated by Richard Stallman in the 1980s. “Now we arrive at the 80s, and I want to talk to you about the GNU project and the four freedoms, which is still a precursor of the open source movement that is the center of this talk, but it’s crucial to understand everything that came after. So the GNU project was an endeavor that Richard Stallman started…”

Another key event further motivated Stallman: losing access to the source code for a new office printer, which rendered his custom printer enhancements useless.

“And then apparently there was one anecdote with an office printer at MIT that kick-started everything. Richard Stallman had added some custom code to the previous printer they had, so that every time someone printed a document it would send a message to the administrator, and if there were too many jobs in the printer queue it would send a message to the users who were waiting for their documents. This was an additional development that was not provided by the printer vendor and that Richard Stallman did himself. Then at some point the department decided to buy a new printer, and suddenly he did not have access to the source code, so his initial development to add all these messaging systems was rendered useless. Apparently this was the moment Mr. Stallman realized that retaining user freedom and protecting it was the most important thing he wanted to devote his life to.”

In 1983, Stallman announced the GNU project, aiming to create a “complete Unix compatible software system” and give it away “free to everyone.” “So Mr. Stallman sent an email to different mailing lists in 1983 that said: ‘Starting this Thanksgiving I’m going to write a complete Unix compatible software system called GNU, for GNU’s Not Unix, and give it away free to everyone who can use it. Contributions of time, money, programs and equipment are greatly needed.’ And this is considered the beginning of the GNU project…” The goal was a full operating system, requiring both a kernel and user-facing utilities.

Stallman’s emphasis on “free” was crucial: “free as in freedom, not as in free beer,” a distinction often lost in translation, especially in English, where “free” conflates both concepts. “And there is one word in this announcement that’s critical, because Mr. Stallman said that he wanted to give it away free to everyone who can use it, and Richard Stallman has spent most of his life after this email clarifying that he meant free as in freedom and not as in free beer. I find that completely fascinating because I’m a Spanish native speaker, and in Spanish libre and gratis are two different words, but of course this distinction doesn’t exist in English. It’s so interesting how language can condition these misconceptions.”

The GNU Manifesto and the Free Software Foundation (FSF) both followed in 1985, solidifying the principles of the GNU project and user freedom. “So after starting with the GNU project developments, Stallman wrote the GNU Manifesto in 1985 and he established the Free Software Foundation, a nonprofit that would protect the interests of the GNU project and user freedom. This organization is still active to this day, and in fact Richard Stallman is still the head of the organization almost 40 years on.”

In 1986, the FSF articulated the Four Freedoms of Free Software: “And then in 1986 the Free Software Foundation wrote the four freedoms, and these were the underpinnings of everything that came after that in the free software movement.”

- Freedom 0: The freedom to run the program for any purpose. “So Freedom 0, because of course they were programmers and they wanted to start with zero, is the freedom to run the program for any purpose. This means that nobody can restrict what you want to use the software for, and we’re going to see that this has some implications that still reverberate to our day.”

- Freedom 1: The freedom to study and change the program. “Freedom 1 is the freedom to study and change the program, which was of course primarily useful for hackers themselves, because the moment any software would not work for whatever reason, they would want to see the source code, possibly change it, and so on.”

- Freedom 2: The freedom to redistribute copies. “And then Freedoms 2 and 3 refer to the freedom to redistribute copies of the software, so essentially the moment you have a piece of software you should be able to give it away to your friend or neighbor.”

- Freedom 3: The freedom to distribute modified versions. “And finally the freedom to distribute modified versions of the software: if you are studying a piece of software and you have the freedom to change it as well, you should also have the freedom to distribute those modified versions to other people.”

These freedoms are meant to be retained by users. This principle led to copyleft licenses like the GNU General Public License (GPL), which ensure these freedoms are passed on to everyone who receives the software. “The consequence of that is that the licenses that the GNU project created, which are called the GNU General Public Licenses or GPL, are said to be copyleft licenses, in the sense that these four freedoms are transmitted in a transitive way: everybody that receives a copy of the program must be able to retain these freedoms, otherwise the distributor would be in breach of the license.”

The challenge of pricing software while upholding these freedoms has spurred diverse business models, a puzzle many still grapple with. “And in fact the difficulty of putting a price tag on software while retaining all these four freedoms has given birth to lots of different business models, and this is still something that lots of entities, companies, and freelance developers are trying to figure out.”

Around the same time, in Switzerland, Tim Berners-Lee at CERN began envisioning a new information system to organize documents, which would later become the World Wide Web. “Meanwhile in Switzerland, by the end of the 80s, there was someone called Tim Berners-Lee who was starting to imagine what a new information system would look like to organize the documents of CERN, the particle accelerator that sits across Switzerland and France, and this would later give birth to what we today call the World Wide Web.”

The 1990s: The World Wide Web, Linux, and an Explosion of Innovation

The 1990s marked a period of rapid acceleration. “So now we enter the 90s, and this is where things start getting interesting and accelerating a lot.” The pace of innovation becomes so intense that a linear timeline is difficult to maintain.

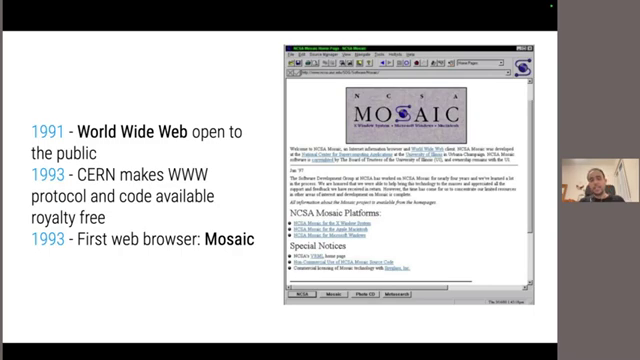

In 1991, the World Wide Web was released to the public by CERN. “So in 1991 the World Wide Web was opened to the public; it had been in development for some years already, as I mentioned…” Crucially, CERN made the web protocol and code freely available, extending the principles of software freedom to the very foundation of the web. “And the truly interesting thing is that CERN made the WWW protocol and the code available royalty free. So in a sense they took these ideas of software freedom and applied them to something as foundational as the web.” This decision laid the groundwork for the public digital infrastructure we rely on today.

The first web browser, Mosaic, appeared shortly after, paving the way for countless browsers to come, all built upon the foundational elements of HTML and HTTP. “And what you see here on screen is a screenshot of the first web browser ever, which is called Mosaic, and this is Mosaic’s own website as it looked back in 1997. This of course was only the first of many web browsers that came after, but the elements that are common to all of them, the HTML markup language, HTTP as a protocol, and so on, were already there at the beginning of the 90s.”

1991: Linux

Also in 1991, Linus Torvalds, a Finnish computer science student, announced Linux. “At the same time a young computer science student in Finland, Linus Torvalds, also in 1991 sent an email to a few mailing lists saying ‘I’m doing a free operating system…’” Linux was initially conceived as a hobby project: Torvalds aimed to create a free operating system for 386 (486) AT clones, distinct from the GNU project.

While the GNU project had made significant progress on utilities, its kernel, GNU Hurd, was still under development. “And if you remember from the GNU project, the original idea for the GNU operating system needed two things: the kernel and the utilities. The utilities had been seeing lots of progress during the late 80s, and they basically had a re-implementation of the Unix system that they already knew from their interaction with the old computers I mentioned at the very beginning. But they were not quite ready to have a kernel; they had a project called GNU Hurd that to this day is still in development and hasn’t gone very far.”

The combination of the Linux kernel and GNU utilities resulted in the GNU/Linux family of operating systems, often simply called Linux. The “coolest thing about Linux” was the rapid emergence of numerous Linux distributions. “But the truly revolutionary thing here is that by combining the Linux kernel, as it came to be named, and the GNU utilities, we had the GNU/Linux family of operating systems, which I’m going to be calling Linux for the rest of the presentation for brevity. And the coolest thing about Linux is that at that point dozens and dozens of Linux distributions started to appear.”

These distributions bundled desktop environments, GNU utilities, and other software. Slackware, the first Linux distribution, appeared in 1993, followed in the same year by Debian, which became one of the most influential distributions and the parent of Ubuntu, Linux Mint, and many others. “Remember that Linux was announced in 1991. In 1993 there was the first distribution of Linux, called Slackware, and when I say distribution here I mean a collection of desktop environments, the GNU utilities, and some extra stuff. Then in the same year, 1993, the Debian distribution was created, and this came to be among the most successful Linux distributions ever and parent to Ubuntu, Linux Mint, and many others.”

The mid-90s saw the rise of commercial Linux distributions like SUSE Linux and Red Hat Enterprise Linux. “And in 1994 and 95 there were two commercial Linux distributions, and this is really interesting because in a way they were fulfilling the dream of Richard Stallman of having software that could be free as in freedom, while at the same time they could build a viable business on top of it and still distribute the source code. These two distributions are SUSE Linux and Red Hat Enterprise Linux…” These distributions demonstrated the viability of building businesses on free software while still adhering to the principles of source code distribution, fulfilling Stallman’s vision.

Beyond the web and Linux, the 90s witnessed an explosion of new programming languages:

- 1991: Python (Guido van Rossum) and R (Ross Ihaka). “So in 1991 Guido van Rossum, a programmer and mathematician from the Netherlands, created Python, which is a language that I’ve been working with for half my life, so I owe it my whole professional career basically. And in that same year the R programming language was created by Ross Ihaka, a researcher in New Zealand…”

- 1991: Vim editor (Bram Moolenaar). “Also Bram Moolenaar, a programmer from the Netherlands, created the Vim editor, and it’s still popular to this day.”

- 1992: X Window System ported to Linux, enabling graphical interfaces. “In 1992 the X Window System was ported to Linux, which made it possible to have visual interfaces on Linux very early on.”

- 1993: Lua scripting language (Roberto Ierusalimschy et al.) and LaTeX (Leslie Lamport). “In 1993 some researchers from Brazil, Roberto Ierusalimschy and many others, created the scripting language Lua, which is very popular in the video game community, and Leslie Lamport from the USA created LaTeX.”

- 1990s: Numeric and Numarray, precursors to NumPy. “And Python was already gaining traction in the scientific and numerical community, and both Numeric and Numarray were created, and these were the precursors of NumPy, which appeared some years after that.”

- 1996: Java (James Gosling). “And in 1996 Java was created by James Gosling from Canada, and this came to be one of the most important enterprise programming languages of the following two decades.”

This flurry of activity culminated in Eric Raymond’s influential essay, “The Cathedral and the Bazaar” (1997).

“So you see all these things were happening at the beginning of the 90s, and this all culminated in 1997 with the writing of the essay The Cathedral and the Bazaar by Eric Raymond.” Raymond contrasted the “cathedral” model of traditional free software development, conducted behind closed doors, with the “bazaar” model exemplified by Linux. In the bazaar model, development was open, with public mailing lists and transparent patch management.

“And so the idea from Eric Raymond here was that the previous generation of free software had been developed behind closed doors. Everybody could get the code and everybody could get the four freedoms, but in between releases nobody could see the intermediate stages of the software, the mailing lists were private, and so on. This is what Raymond called the cathedral model. The Linux development model was completely different, because everything was happening in the open and Linus was sharing how he was or was not merging patches and so on, and he called this the bazaar model…”

Raymond also formulated Linus’s Law: “Given enough eyeballs, all bugs are shallow,” advocating for transparency in development and highlighting the weakness of “security by obscurity.” “And he coined what he called Linus’s Law, which says that given enough eyeballs, all bugs are shallow. This is a way of saying that security by obscurity is not the way to go; instead we should strive for transparency, and the more transparent we are, the sooner we’re going to find the problems in our code. Again, this is one idea that still reverberates to our time, and I find it so fascinating that all of this was a consequence of this exciting period at the beginning of the 90s.”

From Dot-Com Bubble to Open Source Definition: A Turning Point

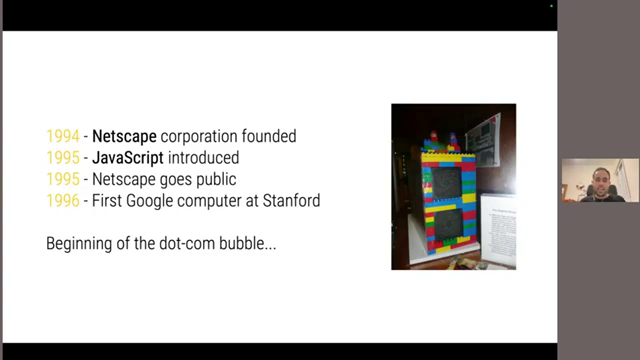

The 1990s also saw significant business developments that would reshape the landscape. In 1994, Netscape Corporation was founded, producing the Netscape Navigator web browser. “However, there were also some interesting things happening on the business side that would have tremendous consequences as well. So in 1994 the Netscape Corporation was founded to produce a different web browser that was called Navigator, so Netscape Navigator.”

Netscape introduced JavaScript in 1995 and went public that same year, marking the beginning of the dot-com bubble. “They introduced the JavaScript programming language in 1995, and later that year the company went public, so this was considered the beginning of what would in the end be the dot-com bubble, and we’re going to see what the consequences of that were.”

Concurrently, young Stanford students Sergey Brin and Larry Page started developing the foundations of Google. “At the same time some very young students at Stanford University, Sergey Brin and Larry Page, had just started creating the very basics of what would then become Google, one of the most powerful tech corporations these days.”

This period culminated in 1998 with the formalization of the term “open source”: “All this activity culminated in 1998, when the open source term finally became official.” Inspired by Raymond’s essay, Netscape released the source code for its browser, a move that resonated throughout the tech industry. This open Netscape Navigator ultimately became Firefox. “So influenced by Eric Raymond’s essay, Netscape released the source code of the browser, and this was so shocking and unusual that it sent waves through the tech industry and motivated lots of enthusiasts and technologists to try to channel that energy. This open Netscape Navigator was the precursor of Firefox, which is again a browser that we still use to this day.”

A strategy meeting, attended by Eric Raymond, Bruce Perens, and others, addressed the confusion surrounding “free software.” Concerns arose that the requirement for transitive freedoms in free software licenses was deterring corporate adoption. “So there was a strategy meeting and several people were present there: Eric Raymond himself, Bruce Perens, who is there in the picture, and many others. They were discussing the fact that this terminology, this free software thing, was too confusing, and there were also hints that the fact that these freedoms had to be transitive, so that they were forced to transmit them to downstream users, was not very interesting for corporations.”

Christine Peterson suggested “open source” as an alternative term, one already in limited use. Bruce Perens and Eric Raymond then founded the Open Source Initiative (OSI), and Tim O’Reilly organized an “Open Source Summit” to promote the new terminology. “So Christine Peterson from the United States suggested using the term open source instead of free software. It was a term that was already in use at the end of the 1980s and beginning of the 90s, but she is credited with coming up with the idea of using it more broadly. Bruce Perens then, with the help of Eric Raymond, founded the Open Source Initiative, and that same year Tim O’Reilly organized what he called an open source summit, and they started spreading the word about the new terminology and all these new ideas.”

A crucial difference emerged: the open source definition did not mandate transitive freedoms. “Interestingly, the open source definition did not mandate that these freedoms are transmitted to users, and so this created a divide in the community…” This philosophical divergence created a lasting divide between “free software” and “open source” proponents. While Stallman initially considered embracing “open source,” he ultimately recognized this key difference and dedicated himself to advocating for free software, leading to a rift that persists within the community.

“Even though Richard Stallman at some point had considered embracing the open source terminology, he realized that there was a subtle but very important philosophical difference between the two, in that open source was not guaranteeing the downstream freedom of the users. And so, sadly, he devoted a very large part of his life to fighting open source proponents, and hence this divide was created, and it is still mentioned by many members of the community to this day.”

The 2000s: Open Source Foundations and the Dawn of Big Data

As the 90s ended and the new millennium began, the digital age truly dawned. Historians often mark 2002 as the turning point when digital data storage surpassed analog. This explosion of digital data paved the way for the “big data era.”

“So we’re about to finish the 90s and enter the new millennium, and I want you to focus on this image on the right, because it might not seem too long ago, but the truth is that what we take for granted today, having everything on digital storage, is relatively new. Historians usually consider 2002, so 22 years ago, the beginning of the digital age. Up to that point there was more information stored by analog means than by digital means, but after that point there was an exponential growth in hard drives, CDs, DVDs, even digital tape and so on. So that was the moment that marked the true beginning of the digital era, and with this explosion of data everywhere came of course the big data era, but we’re going to get to that in a moment.”

The dot-com bubble burst around 2000, a dramatic moment that, despite initial disappointment, ultimately reflected market dynamics. “Before that, it’s my turn to talk about the dot-com bubble. With the advent of the new web, all these open source technologies, the miniaturization of computers and so on, there was this fever and everybody wanted to get into the internet and do digital business. This created a bubble that reached its peak in 2000, and then most of these companies, in the span of two years or less, completely disappeared or saw their value cut by more than half. So this was a very dramatic moment that was seen by many as a big disappointment in the potential of the internet, but in the end it was the dynamics of the market.”

In 2001, corporate hostility towards open source peaked, exemplified by the infamous statement from Steve Ballmer, then Microsoft’s CEO, that “Linux is a cancer.” “In 2001, the corporate animosity towards open source reached its peak, and it’s impossible not to remember this quote by Steve Ballmer, who was the CEO of Microsoft back then, having taken over from Bill Gates. He said at some point that Linux is a cancer that attaches itself to everything it touches.”

However, the open source community was organizing. The 2000s saw the establishment of key open source foundations:

- 1999: Apache Software Foundation, underpinning foundational data technologies like Apache Parquet and Apache Arrow. “So in 1999 the Apache Software Foundation was established, and it is still thriving to this day and underpins lots of the data fundamentals such as Apache Parquet, Apache Arrow, and so on.”

- 2000: Linux Foundation, supporting Linux kernel development. “In the year 2000 the Linux Foundation was established, and they employ Linus Torvalds to this day, and they’re a very successful coalition of companies pushing for development of the Linux kernel.”

- 2001: Python Software Foundation, managing the Python language and organizing PyCon. “In 2001 the Python Software Foundation was established, also in the USA, and they started creating the trademark for the language and soon after organizing PyCon and so on.”

- 2004: Eclipse Foundation, influential in the 2000s. “And back in Europe, in 2004 the Eclipse Foundation was established, which was also very influential, especially in the 2000s.”

Major tech companies, challenging Microsoft’s dominance, began embracing open source principles. In 2003-2004, Google published papers on the Google File System and MapReduce, outlining scalable, distributed computing concepts. “But also the contenders for Microsoft’s dominance were embracing these knowledge sharing ideas, which was accelerating innovation to a pace that had never been seen. So in 2003 Google released this Google File System paper, and the next year they released the MapReduce ideas.” These papers, though academic, demonstrated the cost-effectiveness of scaling out computing tasks across multiple machines.

In 2005, Yahoo! implemented these ideas in the open, leading to the 2006 release of Hadoop. “However, in 2005 one of the big tech giants at the time, Yahoo!, started implementing those ideas, and they did that in the open. This culminated in the release in 2006 of Hadoop, which then became the basis of the whole big data craze for the next 10 years.” Hadoop became the cornerstone of the big data movement for the next decade, enabling petabyte-scale data processing with open source technology. “Hadoop is not a project that is very popular these days, but it was nevertheless the first wave of technologies that enabled companies to process petabytes of data using fully open source technology.”
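To make the MapReduce idea more concrete, here is a minimal single-machine sketch in Python of the classic word-count example. It is an illustration only, not code from the talk, the Google papers, or Hadoop: the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) are hypothetical, and everything runs in one process, whereas a real framework would spread the map and reduce calls across many machines.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def map_phase(document: str) -> List[Tuple[str, int]]:
    """Emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group emitted values by key, the step a framework performs between map and reduce."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(grouped)


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Combine all values for one key into a single count."""
    return key, sum(values)


def word_count(documents: List[str]) -> Dict[str, int]:
    # Each map_phase call is independent of the others, which is what
    # would allow a cluster to run them on different machines.
    emitted = [pair for doc in documents for pair in map_phase(doc)]
    grouped = shuffle_phase(emitted)
    # Each key is reduced independently as well.
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    docs = ["open source is eating software", "software is eating the world"]
    print(word_count(docs))
```

The point of the sketch is that neither phase shares state between calls, so adding machines adds capacity; the grouping step in the middle is where a distributed system has to move data over the network.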

Another pivotal open source project emerged from Linus Torvalds in 2005: Git. Frustrated with a proprietary version control system, Torvalds created Git in just one month. “And finally, something that was extremely influential as well was happening on the side. In 2005 Linus Torvalds, of Linux kernel fame, was pissed because they were using a proprietary version control system under a free license, and at some point the company revoked that license. And so Linus Torvalds took some time off and wrote the Git distributed version control system in one month. I find this extremely impressive.”

While Git itself is a command-line tool without a built-in collaboration layer (Linux kernel development relied on mailing lists for patch exchange), the need for collaborative open source development platforms was growing. “Git is a command line tool, but it had no collaboration layer. In fact, the way the Linux kernel is developed is over mailing lists, where people exchange software patches with the lines they want to modify and so on. Back then this was a very advanced system, and to the best of my knowledge they still keep using it. However, for projects that were smaller in scale than the Linux kernel, lacking a proper collaboration layer was very annoying.”

This need was met in 2008 with the launch of GitHub. “And in 2008 GitHub launched, and this has become the biggest and most important repository of open source and free software ever.” GitHub embodied Raymond’s “Bazaar” model by making development history, issue tracking, and pull requests transparent and accessible. “And the interesting thing is that this puts the idea of Eric Raymond’s Bazaar into practice, because now on GitHub you can see all the history of commits, you can open issues, you can send a pull request; essentially you can see all the development process in the open. So this fulfilled the dream of spreading open source far and wide, but it also introduced these social aspects into the development.”

In 2011, the cutoff point Juan Luis chose for this section, Marc Andreessen, who had co-founded Netscape some years earlier, wrote the influential article “Why Software Is Eating the World.” It resonated across the tech industry and unleashed a new wave of innovators drawn to the fresh world of open source software.

Challenges to Sustainability and the Fragmentation of Open Source in the 2010s

The 2010s brought a new set of challenges to the open source ecosystem. Juan Luis moves faster through the years 2012 to 2018 because, judging by the demographics of the Data Umbrella community, most of the audience was already alive by then and some were already contributing to open source. This is when cracks started to appear in the whole system. There was a notion that software was “eating the world” and, later, that open source was “eating software,” but the question everybody was asking was: what do maintainers get? A famous xkcd comic depicts the whole of modern digital infrastructure depending on a project that some random person in Nebraska has been thanklessly maintaining since 2003. The reality is not far off from that.

The decade saw immense growth of the Python programming language, driven mostly by the data science boom. pandas, the library created by Wes McKinney, was unveiled around 2009 or 2010, and during this time Python became the number one option for all sorts of data manipulation, while scikit-learn became the most important classical machine learning framework in existence. Meanwhile, the world was discovering that open source was not sustainable, because it was built on top of maintainers’ free time. In the 80s and 90s these projects had started as fun projects that, in the words of Linus Torvalds, would never become big and professional. People worked on them to scratch their own itch and to learn with their peers. Suddenly a massive infrastructure rested on these very fragile projects, and vulnerabilities started to appear. OpenSSL, which powers most of the secure web browsing that happens today, turned out to have a vulnerability that was very easy to exploit: Heartbleed. That day the world realized that OpenSSL was basically maintained by one person.

The left-pad incident in 2016 further underscored the precariousness of the ecosystem. Azer Koçulu, a programmer living in the USA, maintained left-pad, a JavaScript library so short that its whole source code fits in about 11 lines, as the screenshots in the slides show. Upset by some decisions made by npm, the Node package manager, he one day removed his library from the registry. It turned out that thousands and thousands of projects depended on it, and the continuous integration systems of virtually the whole web front-end ecosystem broke. The event exposed the deep dependency chains and single points of failure in the modern web ecosystem.
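To give a sense of how little code was involved, here is a hedged re-creation of the idea in Python. This is not the original JavaScript source: the name `left_pad`, its signature, and its error handling are assumptions made for illustration only.

```python
def left_pad(value: object, length: int, fill: str = " ") -> str:
    """Pad the string form of `value` on the left with `fill` up to `length` characters."""
    text = str(value)
    if len(fill) != 1:
        raise ValueError("fill must be a single character")
    missing = length - len(text)
    if missing <= 0:
        # Already long enough: return the text unchanged, never truncate.
        return text
    return fill * missing + text


if __name__ == "__main__":
    print(left_pad(42, 5, "0"))
```

A function this small is easy to write inline, which is part of why the scale of the breakage surprised so many people.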

Nadia Eghbal’s work provided crucial insights into how open source maintainers organize and what motivates them. In 2016 Eghbal, who had become deeply interested in open source, published the seminal report “Roads and Bridges” with the Ford Foundation, and she later wrote the book “Working in Public.” She explained the ways in which open source projects were organizing and how maintainers were motivated to do what they were doing. It was an essential body of work that helped us understand how this whole ecosystem was evolving.

Towards the end of the decade, the open source ecosystem began to fragment in two opposite directions, but for very similar reasons. On one hand, businesses that published their code under open source or even copyleft licenses saw competitors taking that code and competing with them. Some companies accepted this as normal, but others were not so happy with the outcome, so a number of non-open-source licenses appeared: the Fair Source License in 2015, the Business Source License from MariaDB in 2016, and the Commons Clause and the Server Side Public License in 2018. One way or another, all of these licenses restrict competition. They let you take the code and do almost anything with it, but they limit Freedom 0, the freedom to use the software for any purpose: for example, you may not resell the code or offer a managed service on top of it. They were a tool for companies to protect their innovations. There was a period of confusion during these years, because companies tried to pass these licenses off as open source. They may have been open in spirit, but the Open Source Initiative (OSI) has very clear guidelines in its Open Source Definition, and none of these licenses was accepted as open source.

On the other side of the spectrum, people were concerned that open source software was being used for nefarious purposes, which led to the development of “ethical licenses.” One example is a family of copyleft-style licenses, such as the Cooperative Non-violent License, which mandate that only cooperatives and other non-capitalistic actors may use the code. Another is the Hippocratic License, or “do no harm” license, which Coraline Ada Ehmke created towards the end of the decade after she saw that GitHub was working closely with ICE, the US Immigration and Customs Enforcement agency. She wanted a license that developers could use to publish their code while making sure it would never be used for purposes such as war or discrimination. The authors of these licenses also tried to have them recognized as open source, and Coraline even ran in an OSI board election at one point. But because these licenses also restrict Freedom 0, by constraining the purposes the software may be used for, the OSI does not consider them open source either. This is where the tensions and fragmentation within the open source world started to become visible.

The Post-Open Source Era and the Impact of Generative AI (2019-2023)

In a surprising turn, 2018 saw old enemies become friends. Microsoft, whose leadership had said almost 20 years earlier that Linux was a cancer, was now embracing open source. Satya Nadella, who succeeded Steve Ballmer as CEO, completely changed the culture of the company, and in 2018 Microsoft acquired GitHub, effectively becoming the owner of the biggest code repository in the world.

In the period 2019-2023 we find ourselves in a “post-open source era,” shaken by the eruption of generative AI. Juan Luis included only one album cover on this slide because the decade is still in progress and he hopes to discover new music in the coming years. He says only a few words about generative AI itself, because it is already everywhere: most of us have played with systems such as DALL-E, DALL-E 2, Midjourney, and Stable Diffusion, as well as ChatGPT and its derivatives.

One important development is that generative AI companies now face copyright lawsuits from journalists, artists, and others. OpenAI, the company behind ChatGPT, has acknowledged that it is impossible to create useful AI models without copyrighted material. This takes us back to the 1976 Copyright Act in the USA and to the question of what copyright means in the modern era. Depending on where you live, you may have had problems for downloading pirated movies or TV series over torrents. Yet the current generation of generative AI models was built from datasets made by scraping the whole internet: images used without consent, because consent cannot be obtained at that scale, and source code from GitHub, some of it under strong copyleft licenses.

This raises complex questions. When GitHub Copilot gives you a chunk of code, is that a derivative work by the definition of the GPL, and if so, should you release it under a copyleft license? Copilot, ChatGPT, and similar tools have become “black boxes” that obscure all the effort the digital pioneers put in during the 80s, 90s, and 2000s, and we are still figuring out how to proceed.

The computational resources required to train and even to use these systems are out of this world. Sam Altman is reportedly seeking seven trillion US dollars in funding (American trillions, that is, 7 × 10^12) to build a competitor to Nvidia, so that OpenAI and other companies do not depend on Nvidia’s GPUs to train and run inference with generative AI models. Put next to the GDP of your country, or to the budget needed to end world hunger, the figure is astonishing. Whether we will be able to gather these resources at a time when we are starting to face water scarcity and climate change is up for debate.

The future remains uncertain. At the time of the talk there is an active discussion thread, in which Juan Luis is participating a little, trying to settle on a term for licenses that are not really open source but are open source in spirit, which companies want to use to protect their innovations while still giving back to the software commons. The effort is a coalition led by Chad Whitacre and David Cramer from Sentry, with many other companies involved. Many names have been proposed, and anyone can contribute and write history just by commenting on GitHub.

What’s next? Nobody knows; there is only a big question mark. The outcome could be a proliferation of non-open licenses, a wave of lawsuits against generative AI models, or even a redefinition of how copyright works. But we are here, at the forefront of this evolution, and we can make a dent in it and join the pioneers who have been building our digital infrastructure for the past 50 years.